It’s been a while since I made a blog post, and I’ve just finished a (relatively) quick switch-over of my homelab network layout for inbound traffic to Kubernetes. A perfect time to write about it!

Hosting public services at home is always a problem, because there’s a risk of exposing your home network to all sorts of nasty attacks, not to mention the amount of detail an IP address can reveal. So I’ve always been looking for ways to lock this down. In this post I’ll go over how I currently manage the ingress (inbound) traffic into my homelab. I’ll also summarise a few false starts along the way to what is currently a good solution for my needs.

Introduction & Background

My first thought was to use a virtual private server with a reverse proxy and a VPN connection. Aside from the fact that it defeats the purpose of a homelab, this brings additional cost, along with a whole pile of maintenance. It also doesn’t exactly guarantee security, as the VPS is still a large attack surface and would need a firewall and, at minimum, basic DDoS protection.

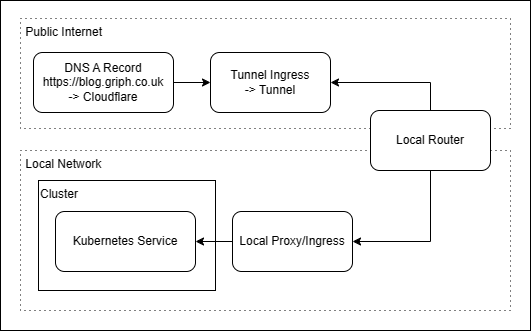

While I was looking for DDoS protection, Cloudflare came up. Their proxying service points a DNS record (blog.griph.co.uk in this case) at Cloudflare first, and then proxies the HTTP traffic on to your home’s public IP address. This also completely does away with the need for a VPS, as Cloudflare hides your IP behind its proxy. I used this method with fairly little issue for a few months.

The main pain point with this setup is the need for a static IP address, which can be quite tricky to get hold of from a domestic broadband provider. Because of this I had to use a service to update the IP address in Cloudflare via their API each time my ISP decided to give me a new public IP. It also still needs a proxy within the local network to terminate HTTPS and to forward traffic for each subdomain to the correct local service. On a self-hosted Kubernetes cluster, ingress is not a trivial task and requires some manual setup. MetalLB goes a long way to help with this, providing LAN-level IP addresses that expose services outside the cluster, which can then be proxied by a local NGINX server.
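As a rough illustration of that IP-update step, here is a minimal Python sketch of the request such a service would send to Cloudflare’s DNS records API. It is not the tool I actually used; the zone ID, record ID and token are placeholders you would look up in your own account, and the function names are made up for this example.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def needs_update(current_ip, recorded_ip):
    """Only call the API when the ISP has actually handed out a new address."""
    return current_ip != recorded_ip


def build_dns_update(zone_id, record_id, token, name, ip):
    """Build (but do not send) a request that points an A record at `ip`.

    zone_id, record_id and token are placeholders supplied by the caller.
    """
    body = {
        "type": "A",
        "name": name,
        "content": ip,
        "ttl": 1,         # 1 means "automatic" in the Cloudflare API
        "proxied": True,  # keep traffic flowing through Cloudflare's proxy
    }
    return urllib.request.Request(
        url=f"{API_BASE}/zones/{zone_id}/dns_records/{record_id}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` would send it. Run on a timer, with `needs_update` guarding the call, this is roughly all a dynamic DNS updater has to do.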

The next logical step in this architecture would have been to set up a local ingress within the cluster, assign it a MetalLB address and effectively move the HTTP proxy into the cluster. However, a better solution arose.

Cloudflare Tunnel

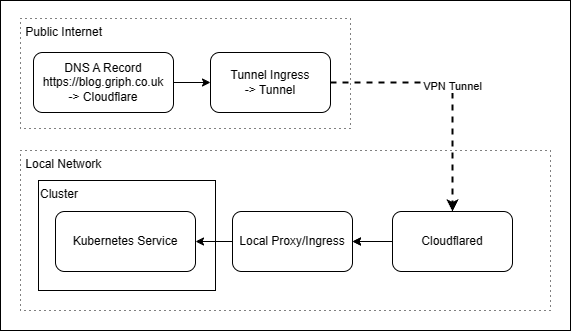

After getting bored of dealing with SSL certs between my gateway NGINX and Cloudflare, I decided that a VPN might not be so bad after all. As luck would have it, however, Cloudflare have their own solution for this: Cloudflare Tunnel, which handles all the complex connection and config around the VPN setup, without needing a VPS. This also has the added advantage of not needing any open ports on my home network, plugging up security holes and removing the need for a static IP.

The locally running cloudflared service opens an outbound connection to Cloudflare, which is why no inbound ports are needed. Cloudflare then routes traffic back through that tunnel to cloudflared, which forwards it on to local network services. Configuration can be done either locally in the service, or remotely through the Zero Trust dashboard.
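To give a feel for how that forwarding is decided, here is a small Python model of cloudflared-style ingress rules: they are checked top to bottom, the first matching hostname wins, and the last rule is a catch-all. The hostnames, service URLs and the `resolve` helper are invented for illustration, and the wildcard matching is only an approximation of what cloudflared really does.

```python
from fnmatch import fnmatchcase

# Hypothetical rules, shaped like the ingress section of a cloudflared config.
INGRESS = [
    {"hostname": "blog.griph.co.uk", "service": "http://192.168.1.50:8080"},
    {"hostname": "*.griph.co.uk", "service": "http://192.168.1.50:80"},
    {"service": "http_status:404"},  # catch-all: no hostname, must come last
]


def resolve(hostname, rules=INGRESS):
    """Return the service for the first rule whose hostname pattern matches."""
    hostname = hostname.lower()
    for rule in rules:
        pattern = rule.get("hostname")
        # A rule without a hostname matches everything (the catch-all).
        if pattern is None or fnmatchcase(hostname, pattern):
            return rule["service"]
    raise ValueError("ingress rules must end with a catch-all rule")
```

Because order matters, the specific `blog.griph.co.uk` rule has to sit above the wildcard, otherwise the wildcard would swallow it.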

For the last year or so, the cloudflared service has been ticking away nicely on my Raspberry Pi 4. This did, however, still leave one problem: redundancy. My 3-node K8s cluster has built-in fail-over and resilience, but if the Pi needs updates or restarting then ALL of my services are down, as the tunnel is not there to receive traffic.

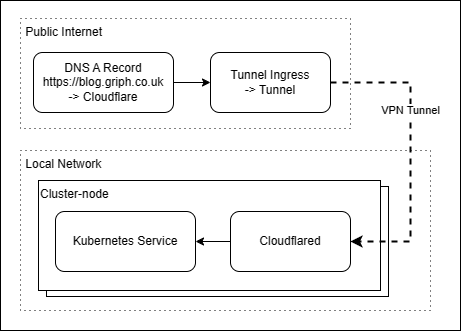

Kubernetes

The more astute readers may have already worked out the next step: just run cloudflared in the cluster! Rather than running cloudflared as a service on my Raspberry Pi, run it in a container on the cluster itself. Cloudflare Tunnel allows multiple instances of cloudflared to connect with the same tunnel authentication and will load balance between them, meaning that even if a node goes offline, a tunnel will still be available to serve traffic on another node. This is achieved by running one cloudflared container per node, as a DaemonSet within Kubernetes.
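For a rough idea of what that DaemonSet looks like, here is a Python sketch that builds a minimal manifest as a dictionary. This is not my exact manifest: the namespace, the Secret name and its `token` key are assumptions, and it assumes a remotely managed tunnel whose token is handed to cloudflared through the `TUNNEL_TOKEN` environment variable.

```python
import json


def cloudflared_daemonset(namespace="cloudflared", secret_name="cloudflared-token"):
    """Build a minimal DaemonSet manifest: one cloudflared pod per node.

    `namespace` and `secret_name` are placeholder names; the Secret is
    assumed to hold the tunnel token under the key "token".
    """
    labels = {"app": "cloudflared"}
    container = {
        "name": "cloudflared",
        "image": "cloudflare/cloudflared:latest",  # pin a version in practice
        "args": ["tunnel", "--no-autoupdate", "run"],
        "env": [{
            "name": "TUNNEL_TOKEN",
            "valueFrom": {"secretKeyRef": {"name": secret_name, "key": "token"}},
        }],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "cloudflared", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }
```

Printing `json.dumps(cloudflared_daemonset(), indent=2)` gives JSON that can be saved to a file for `kubectl apply -f`, since kubectl accepts JSON as well as YAML. Every pod uses the same token, which is what lets Cloudflare treat them as replicas of one tunnel.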

Summary

To sum up, this is a neat solution to a problem I created for myself, and hopefully one that will work solidly into the future.

Update (2023-09-07): I’ve been using this setup for a year now, and it’s been rock solid. Setting up new sites is simple and I’ve had no issues with Cloudflare.

Documentation

For in-depth instructions on setting this up, check out Cloudflare’s detailed documentation, available here.